Output checking helps to keep private data safe

This article is part of a series: The Past, Present and Future of OpenSAFELY

The whole point of OpenSAFELY is that it enables researchers to make use of private health data, without compromising anyone’s privacy.

Researchers don’t get direct access to the raw data, but they can still use it to produce research outputs in the form of graphs and tables. These outputs contain aggregated summaries of individual patient data, which can provide useful insights without revealing private personal information.

But even summary outputs could accidentally disclose information that might identify individuals or groups of people. Output checking is the work we do to minimise the risk of that happening.

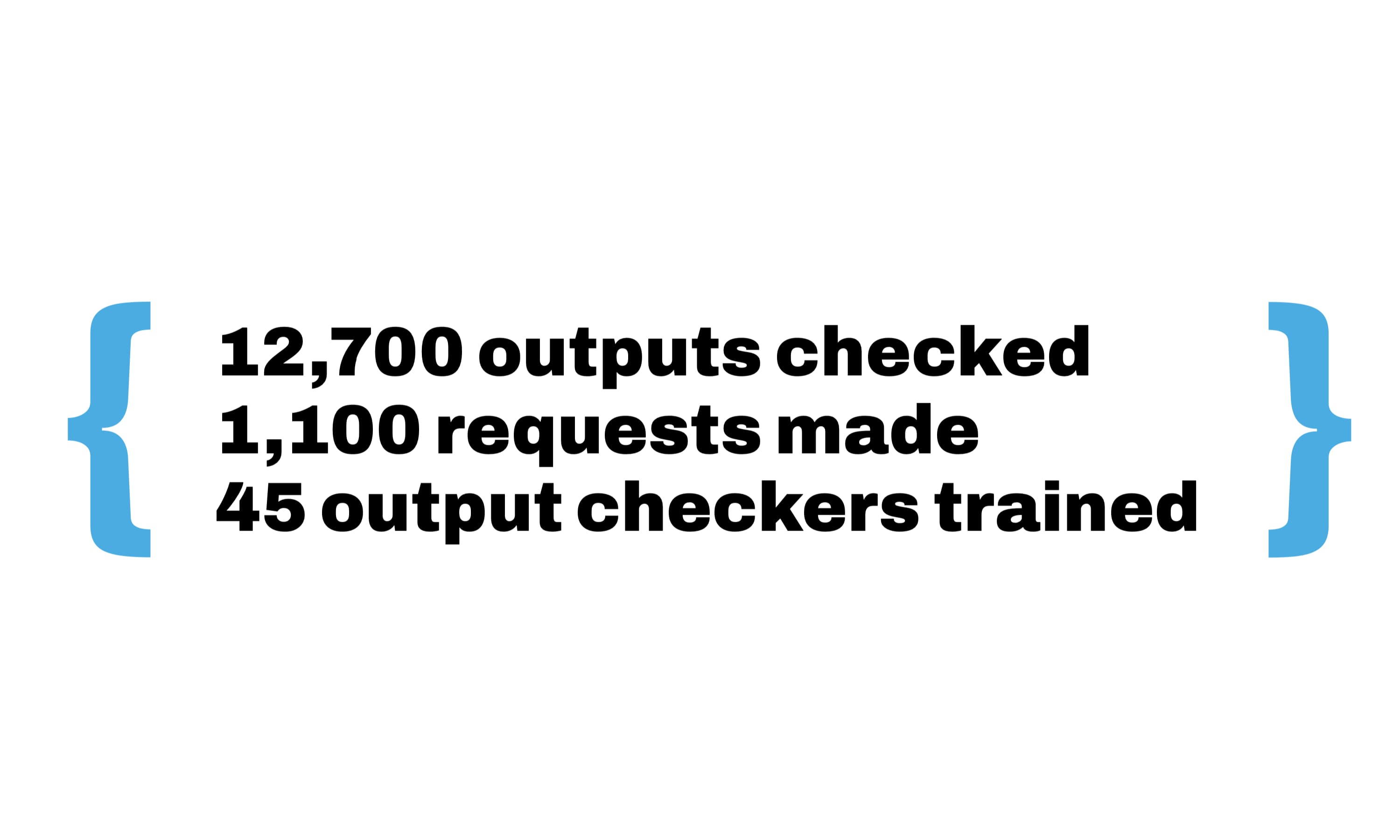

Output checkers are experienced data science professionals. Current and former members of the output checking team include people from the Bennett Institute, the London School of Hygiene and Tropical Medicine and the University of Bristol. Even with their data science backgrounds, all checkers go through formal training and have to pass an exam to complete it. That training is provided by colleagues from the Data Research, Access and Governance Network at the University of the West of England.

An output checker’s job is to examine research outputs from OpenSAFELY, and judge whether or not they pose any risk of disclosing identifiable private information. Just like the researchers, the output checkers don’t have direct access to the raw data – but they do have the experience and expertise to spot the kinds of outputs that could be problematic.

It’s important that output checking happens, and that it isn’t rushed. But no one wants to delay scientific progress, either. We aim to strike a sensible balance: the majority of requests submitted by researchers have been checked in fewer than seven days, and most checks are done in under 30 minutes.

The process for output checking looks like this:

- Having run a job within OpenSAFELY, researchers use Airlock to view the initial outputs within the secure environment. They are expected to carry out disclosure checking on these themselves, before passing anything up to the output checking service. (All OpenSAFELY users have to complete training on this before they’re allowed to start using the platform.) Then they’ll fill in a request form, in which they explain what each output file shows, and any disclosure controls they’ve already applied.

- The request is tracked as an issue on GitHub, and two output checkers are assigned to take a look.

- Each output checker gets access to the same output results that the researcher saw, and marks each file with a grade: approved, approved subject to change, or rejected.

- The reviews are sent back to the researcher, who proceeds accordingly. If any files were marked as “approved subject to change”, the output checker will explain what change is necessary, and the researcher will have to make that change and re-submit for another output check.

- Once the outputs are approved by both output checkers, they are released from the secure environment to the researchers, who can continue with any further analysis.
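For readers who find it easier to follow a process as code, the steps above can be sketched roughly as follows. This is an illustration only, not how Airlock or the output checking service is actually implemented: the `ReleaseRequest` class, its method names and the file names are all hypothetical. It also assumes that when the two checkers disagree about a file, the more cautious grade wins, which the process description does not specify.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Grade(Enum):
    """The three grades an output checker can give a file."""
    APPROVED = "approved"
    APPROVED_SUBJECT_TO_CHANGE = "approved subject to change"
    REJECTED = "rejected"


# Assumption: if the two checkers disagree, the more cautious grade wins.
SEVERITY = {
    Grade.APPROVED: 0,
    Grade.APPROVED_SUBJECT_TO_CHANGE: 1,
    Grade.REJECTED: 2,
}

REQUIRED_CHECKERS = 2  # two output checkers are assigned to each request


@dataclass
class ReleaseRequest:
    """Hypothetical model of one request to release output files."""
    files: list
    # reviews[checker_name][file_name] -> Grade
    reviews: dict = field(default_factory=dict)

    def record_review(self, checker: str, grades: dict) -> None:
        """Store one checker's grades; every file must be graded."""
        missing = set(self.files) - set(grades)
        if missing:
            raise ValueError(f"no grade given for: {sorted(missing)}")
        self.reviews[checker] = dict(grades)

    def overall_grade(self, file_name: str) -> Optional[Grade]:
        """Combined grade for a file, or None while reviews are pending."""
        if len(self.reviews) < REQUIRED_CHECKERS:
            return None
        grades = [review[file_name] for review in self.reviews.values()]
        return max(grades, key=SEVERITY.get)

    def files_to_release(self) -> list:
        """Files that every assigned checker has approved."""
        return [
            name for name in self.files
            if self.overall_grade(name) is Grade.APPROVED
        ]
```

In this sketch, nothing is released until both reviews are recorded, and a file graded “approved subject to change” by either checker stays inside the secure environment until the researcher re-submits it.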